rwf files, PLEASE use the gaussian option to split the file in different chunks. Please take care of cleaning your scratch directory, because it is a common working area, and performance degradation can occurr when it is full. To avoid that, the directive %NoSave specifies that the named scratch files that appear before the %NoSave have to be deleted when the computation ends normally. rwf files, filling the SCRATCH filesystem unnecessarily. Gaussian deletes the unnamed file in case of successful completion, but always keeps the named. rwf file in case of unsuccessful completion, so that one can restart from this file. Gaussian internal policy is that of always saving the. rwf file in the SCRATCH directory you specified, with a name based on the process id (these are considered unnamed files because the user does not provide explicitly a name). Remember to replace path-to-scratch with the actual name of your scratch directory.Įven if you do not specify a name, Gaussian will write a. It can be a huge file and has to be put in the scratch directory. %RWF ( read write file), stores a lot of information about the state of the computation.

%Chk specify the checkpoint file, that is usually saved in the your home directory.

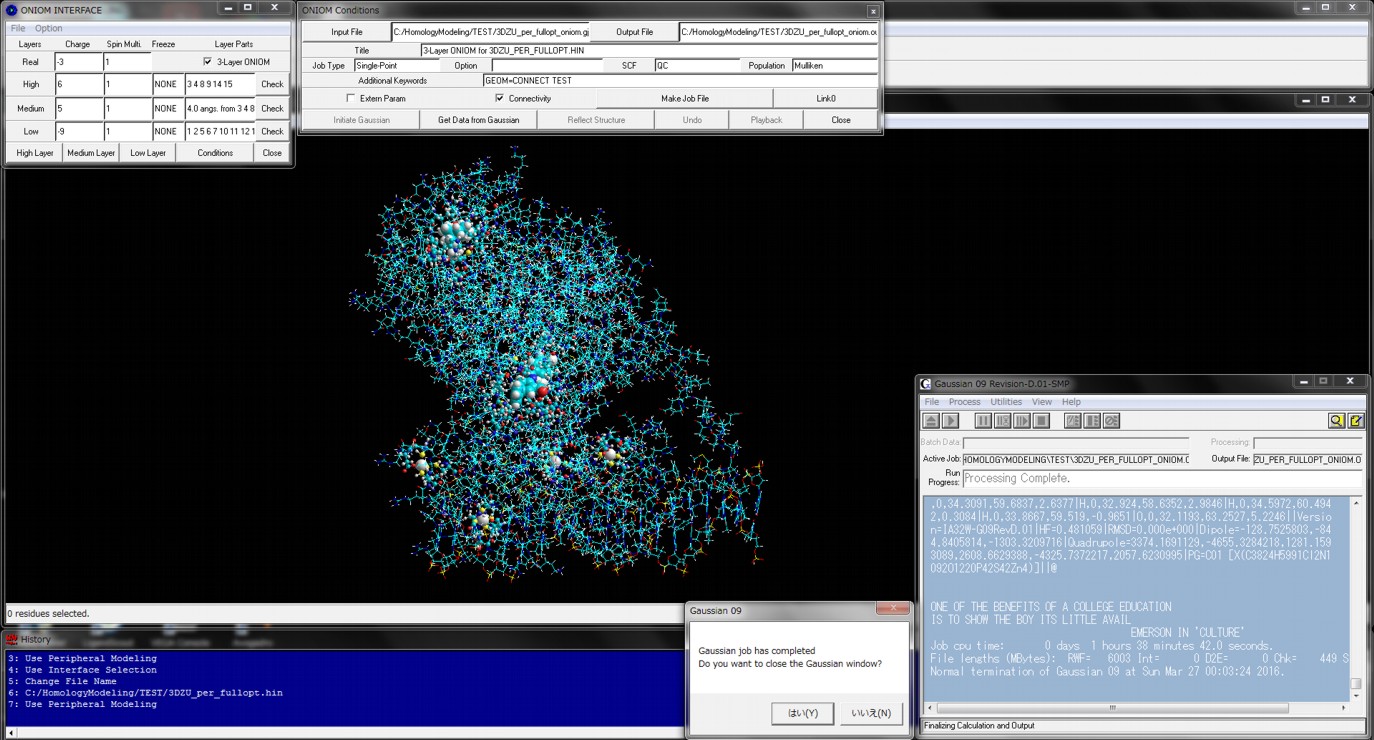

They specify the name and the location of two of the important files that Gaussian can create, and that can be used to restart the calculation. Two other directive are worth mentioning: %Chk and %RWF. Increasing this number is often needed for direct SCF calculations, but requesting more memory than the amount available on the compute node will lead to poor performance. %Mem specifies the total amount of memory to be used by Gaussian. In the Gaussian input file there are other keywords that are important for the execution on the HPC. #export GAUSS_SCRDIR=path-to-scratch, and remove the comment sign “#” at the beginning. With the actual path to your own scratch directory in the line To be able to run Gaussian you have been assigned a scratch directory. The number of cores ( here 4) must be the same set with %NProcShared in the Gaussian input file. The important options here are #BSUB -n 4Ĭombined, they request to reserve 4 cores on 1 node. # - setup of the gaussian 16 environment -Įxport g16root=/appl/hgauss/Gaussian_16_A03 # -o and -e mean append, -oo and -eo mean overwrite. # - specify that the cores MUST BE on a single host! It's a SMP job!. # - specify that we need 2GB of memory per core/slot. # - estimated wall clock time (execution time): hh:mm. # if you want to receive e-mail notifications on a non-default address # please uncomment the following line and put in your e-mail address, # - Notify me by email when execution ends. # - Notify me by email when execution begins. # embedded options to bsub - start with #LSF With %NProcShared=4 you are requesting 4 cores.Ī simple script for running this Gaussian job under LSF could be the following: #!/bin/sh The correct Gaussian directive for specifying the number of cores is %NProcShared. It is important to leave a blank line at the end of a Gaussian input file! 
A sample Gaussian input file (let’s call it for future references) is shown here: %NProcShared=4 Conflicts could cause problem to the execution of your own job, and to the other HPC users. Gaussian16 has its own syntax for specifying the use of parallel resources and memory, and you have to take care that these specification do not conflict with the resources requested through the scheduler, i.e. You can therefore ask for up to 20, 24 or 32 cores on a single HPC node. This means that only the shared memory version can be run. The latter relies on a proprietary parallel execution environment (Linda), that is not available on the HPC. Gaussian16 comes in two versions, one capable of running on Shared Memory nodes, and one for network parallel execution. If you are going to use it you will probably need to run it in parallel. The latest version installe is Gaussian16. This is achieved by mailing a request containing your username to Kasper Planeta Kepp: can now run Gaussian on the HPC. ask for the creation of your own directory in one of the scratch filesystems.ask to become member of the *gaussian* group.

#Gaussian software job license#

are installed on the HPC cluster, but due to license restrictions you have to become a member of the Gaussian group to be able to run it.

Different versions of the Gaussian-family prograns from Gaussian Inc.
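Because the scratch area is shared and leftover .rwf files can fill it up, it may help to check regularly what is still lying around. The following is only a minimal sketch: the function name list_stale_rwf is hypothetical, and it assumes that the files of interest end in .rwf and that the age threshold is given in whole days.

```shell
#!/bin/sh
# Hypothetical helper (not part of Gaussian or LSF): list read-write files
# in a scratch directory that were last modified more than a given number
# of days ago, so that they can be reviewed and removed by hand.
# Assumptions: the files end in ".rwf"; "days" is a whole number.
list_stale_rwf() {
    scratch_dir=$1
    days=$2
    # -type f skips directories; -mtime +N matches files whose age,
    # rounded down to whole days, is greater than N.
    find "$scratch_dir" -type f -name '*.rwf' -mtime +"$days" -print
}
```

A call such as list_stale_rwf "$GAUSS_SCRDIR" 7 only prints the candidates. Deleting them is deliberately left as a separate, manual step, since a .rwf file kept after a failed run may still be needed for a restart.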
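Since the number of cores requested from LSF must match the value of %NProcShared in the Gaussian input file, a small check before starting the calculation can catch a mismatch early. This is a sketch under stated assumptions: the helper names nprocshared_of and check_cores are hypothetical, and the directive is assumed to appear on its own line in the form %NProcShared=N.

```shell
#!/bin/sh
# Hypothetical helper: print the value of the first %NProcShared directive
# found in a Gaussian input file (prints nothing if there is none).
nprocshared_of() {
    # grep -i matches the directive regardless of letter case;
    # cut takes the part after "="; tr drops stray whitespace.
    grep -i '^[[:space:]]*%nprocshared=' "$1" | head -n 1 | cut -d= -f2 | tr -d '[:space:]'
}

# Hypothetical helper: succeed only if the input file ($1) requests exactly
# the number of cores given as the second argument ($2).
check_cores() {
    requested=$(nprocshared_of "$1")
    if [ "$requested" = "$2" ]; then
        echo "ok: %NProcShared=$requested matches $2 cores"
    else
        echo "mismatch: %NProcShared=${requested:-unset}, scheduler cores=$2" >&2
        return 1
    fi
}
```

Inside a job script, the second argument could be the core count given to #BSUB -n, or, assuming your LSF installation sets it, the LSB_DJOB_NUMPROC environment variable, for example check_cores input-file "$LSB_DJOB_NUMPROC" || exit 1, where input-file stands for your own Gaussian input file.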
